You know what? The most interesting part of crypto right now might be where it collides with machine learning. Bittensor, powered by the TAO token, sits right at that crossing. It is a decentralized, peer-to-peer network where people build, train, and share machine learning models. Not behind a paywall. Not hidden inside a single company. Out in the open, with rewards tied to performance.

That idea sounds bold. It also feels practical. If models live on a network, they can compete, cooperate, and improve. And if the rewards are native to the protocol, the incentives stay aligned with the work. Simple in spirit, a bit complex underneath, and very crypto at heart.

So, what is Bittensor TAO in plain language?

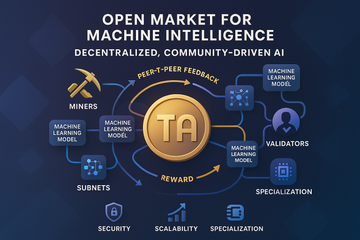

Think of Bittensor like a marketplace for intelligence. On one side, you have people who run models, called miners. They provide answers, embeddings, retrieval, or other useful outputs. On the other side, you have validators. They query models, measure quality, compare results, and route rewards. TAO is the token that keeps score and pays for value created.

The twist is that everything happens on a peer-to-peer network. No central curator. No single company deciding which model matters. Models rise or fall based on real signals, such as accuracy, usefulness, or consistency. That is the pitch.

How the pieces talk to each other

Miners and validators, a quick tour

Miners run models and respond to prompts from validators. They might handle text generation, data filtering, or search. Validators send requests, evaluate replies, and assign weights. Rewards flow to the models that help most, not the loudest ones. It is a feedback loop, built into the protocol.

Because there is no chief editor, validators carry real responsibility. They filter noise, curb spam, and push incentives toward quality. When validators do a good job, the whole subnet improves over time.
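Here is a rough sketch of that feedback loop in Python. It is a toy, not Bittensor's actual scoring code: the function names, the simple "starts with the right word" scoring rule, and the emission figure of 10.0 are all assumptions made up for illustration.

```python
from typing import Callable, Dict

def score_replies(replies: Dict[str, str],
                  score_fn: Callable[[str], float]) -> Dict[str, float]:
    """Validator step: give each miner's reply a non-negative quality score."""
    return {miner: max(0.0, score_fn(reply)) for miner, reply in replies.items()}

def normalize(scores: Dict[str, float]) -> Dict[str, float]:
    """Turn raw scores into weights that sum to 1 (all zeros if nobody scored)."""
    total = sum(scores.values())
    if total == 0:
        return {miner: 0.0 for miner in scores}
    return {miner: s / total for miner, s in scores.items()}

def split_reward(weights: Dict[str, float], emission: float) -> Dict[str, float]:
    """Share a fixed reward pool in proportion to the weights."""
    return {miner: w * emission for miner, w in weights.items()}

# Hypothetical round: three miners answer "What is the capital of France?"
replies = {"miner_a": "Paris", "miner_b": "paris, France", "miner_c": "Berlin"}

def toy_score(reply: str) -> float:
    # Assumed scoring rule for the example only.
    return 1.0 if reply.strip().lower().startswith("paris") else 0.0

weights = normalize(score_replies(replies, toy_score))
rewards = split_reward(weights, emission=10.0)
print(weights)
print(rewards)
```

The two miners with a useful answer split the pool, and the third gets nothing. Real subnets use far richer scoring, but the shape is the same: query, score, normalize, pay.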

Subnets, the neighborhood model

Bittensor is split into subnets. Each subnet targets a task, such as language modeling or retrieval. You can imagine each subnet like a specialty neighborhood. Different rules, different data shapes, but the same core goal: a fair market for useful model outputs.

Specialization matters here. A subnet can tailor incentives, scoring logic, and interfaces for its niche. That keeps the system flexible without making users learn a new protocol every week.

Incentives, the secret sauce

TAO fuels the network. Miners and validators earn TAO when they contribute useful work. Staking can influence how much weight a validator's scores carry and, through that, which miners get noticed. The reward schedule encourages steady contribution over time. It is not about a single sprint. It is about sustained quality.
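One way to picture how stake can shape outcomes is to let each validator's scores count in proportion to its stake. The sketch below is deliberately simplified and assumes a plain stake-weighted average. Treat it as an illustration of the idea, not the protocol's actual rule; every name and number in it is hypothetical.

```python
from typing import Dict

def stake_weighted_scores(validator_weights: Dict[str, Dict[str, float]],
                          stakes: Dict[str, float]) -> Dict[str, float]:
    """Combine per-validator miner weights, scaled by each validator's stake share."""
    total_stake = sum(stakes[v] for v in validator_weights)
    if total_stake <= 0:
        raise ValueError("total stake must be positive")
    combined: Dict[str, float] = {}
    for validator, weights in validator_weights.items():
        share = stakes[validator] / total_stake
        for miner, w in weights.items():
            combined[miner] = combined.get(miner, 0.0) + share * w
    return combined

# Hypothetical example: val_1 holds three times the stake of val_2.
stakes = {"val_1": 300.0, "val_2": 100.0}
validator_weights = {
    "val_1": {"miner_a": 0.8, "miner_b": 0.2},
    "val_2": {"miner_a": 0.4, "miner_b": 0.6},
}

combined = stake_weighted_scores(validator_weights, stakes)
print(combined)
```

Here val_2 prefers miner_b, but val_1's larger stake pulls the combined result toward miner_a. That is the basic sense in which stake turns into influence.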

Why decentralization helps AI builders

Centralized AI feels slick, until you hit a wall. Rate limits, closed weights, or shifting terms can slow teams down. In a network like Bittensor, anyone can try a new idea. If your model helps, you get noticed. If it does not, the market tells you fast. Tough but fair.

There is also resilience. If one team stops, the subnet keeps going. If one model fades, another steps in. That redundancy adds a quiet kind of comfort. It is not loud, yet it is valuable.

Where TAO fits for crypto natives

For the crypto crowd, TAO is more than a ticker. It is the unit that measures and pays for intelligence on the network. If you care about open AI markets, TAO represents the right to participate and to steer work toward quality.

Security matters here. If you hold TAO, treat it like any serious asset. Consider cold storage. Many investors prefer hardware wallets for long-term holdings. Ledger and Trezor are popular choices for keeping private keys offline and signing transactions on the device. Tooling for specific chains can vary, so confirm support before you move funds. Your keys, your rules, and hopefully, your peace of mind.

What can you build on Bittensor?

Plenty. The menu keeps growing. Some classics show up again and again, mainly because they work.

- Language tasks: generation, summarization, translation, and code hints.

- Retrieval and search: fast, relevant lookups over curated datasets.

- Evaluation: models that score other models, a core piece for quality control.

- Data shaping: filtering, deduplication, and privacy checks before training.

- Specialist agents: domain models for law, finance, health research, or gaming.

Some teams stack subnets together. A retrieval subnet feeds a generation subnet. An evaluation subnet keeps them honest. It feels like modular robotics, only the parts are models that talk over a shared protocol.
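To make the stacking idea concrete, here is a tiny pipeline with the three stages as plain Python functions. Nothing here talks to a real subnet. The word-overlap retrieval, the template "generation", and the pass/fail evaluation are all stand-ins invented for the example.

```python
from typing import List

# Hypothetical mini corpus for the retrieval stage.
DOCS = [
    "TAO is the token used to reward useful work.",
    "Miners run models and answer queries.",
    "Validators score replies and assign weights.",
]

def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Retrieval stage: rank documents by how many words they share with the query."""
    q_words = set(query.lower().split())
    def overlap(doc: str) -> int:
        return len(q_words & set(doc.lower().rstrip(".").split()))
    return sorted(docs, key=overlap, reverse=True)[:k]

def generate(query: str, context: List[str]) -> str:
    """Generation stage: a template stand-in for a real language model."""
    return f"Q: {query} | Based on: {' '.join(context)}"

def evaluate(answer: str, context: List[str]) -> float:
    """Evaluation stage: 1.0 if the answer includes every retrieved passage, else 0.0."""
    return 1.0 if all(passage in answer for passage in context) else 0.0

query = "what do validators score"
context = retrieve(query, DOCS)
answer = generate(query, context)
quality = evaluate(answer, context)
print(context, quality)
```

Each stage only needs to agree on inputs and outputs with its neighbors, which is what lets separate teams own separate parts.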

Let me explain the developer angle

Building in public can be scary. It is also the fastest way to learn. On Bittensor, you can launch a miner, plug into a subnet, and start receiving queries. You can also run a validator, tune your scoring logic, and route rewards toward models that impress you.

Two tips keep coming up. First, start small. Prove a narrow task before you add features. Second, observe. Watch the reward flow and see how your model behaves under real traffic. Feedback from the protocol often beats your gut.

The economics, without the fluff

People like to argue about token supply and charts. Fair. But the day-to-day action is simpler. If the network pays for useful answers, contributors keep showing up. If it tilts toward spam, they leave. That is the essence. Economics becomes an incentive mirror. It reflects where the value sits.

The beauty of peer scoring is that it feels continuous. Not a quarterly report. Not a marketing slide. Just a stream of signals that says yes or no. Over time, quality compounds. That is the hope, and it is not a bad one.

Upsides, risks, and honest wrinkles

It is easy to cheer. It is harder to name the rough edges. So let us name a few.

- Quality drift: without tight guardrails, some models chase rewards with shortcuts. Good validation design helps, but it is a moving target.

- Data sensitivity: contributors must watch what data they use. Licensing, privacy, and provenance matter. The network rewards skill, not shortcuts with hidden costs.

- Compute pressure: training is expensive. Efficient architectures and clever caching can soften the load. Still, you feel the bill.

- Coordination: subnets need social glue. Clear specs, helpful docs, and transparent updates make a difference.

There is also a strange paradox. Open markets reduce gatekeeping, which invites experimentation. That same openness demands stronger filters to preserve quality. It looks like a contradiction. In practice, it pushes the network to get better at measuring value.

How it compares with the usual AI stack

Centralized platforms win on convenience. Fast setup. Clean dashboards. Strong support. Bittensor trades some of that comfort for openness and shared ownership. If your team craves control and composability, the trade is worth it. If you just need a polished API for a short sprint, a hosted model might still be the easier call. No shame in that.

There are other crypto AI projects too. Some focus on agent networks, others on compute markets, and some on data exchanges. Bittensor’s angle is a live market for model outputs. That niche feels clear and, honestly, pretty useful.

Practical steps to get started

If you want to test the waters, keep it simple. Run one component, study rewards, then adjust. Repeat. A few habits help newcomers land on their feet.

- Read the docs: check setup guides, subnet specs, and examples. Keep notes.

- Pick a narrow task: win one lane, such as short summaries or structured answers.

- Model hygiene: track prompts, seed your tests, and log failures. Boring, but powerful.

- Secure your keys: use a hardware wallet like Ledger or Trezor for long-term storage when supported. Test small transactions first.

- Join the chat: community channels often reveal real pain points and quick fixes.
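The model hygiene habit is easy to sketch. The snippet below assumes a toy model and a tiny hand-written test set, both hypothetical. It shows the two boring parts: a fixed seed so the same prompts are drawn every run, and a log line for every failure.

```python
import logging
import random
from typing import Callable, Dict, List

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("miner_eval")

def sample_prompts(prompts: List[str], n: int, seed: int) -> List[str]:
    """Draw a reproducible sample: the same seed always gives the same prompts."""
    rng = random.Random(seed)
    return rng.sample(prompts, n)

def run_eval(model: Callable[[str], str],
             prompts: List[str],
             expected: Dict[str, str]) -> List[str]:
    """Run the model on each prompt, log mismatches, and return the failing prompts."""
    failures = []
    for prompt in prompts:
        got = model(prompt)
        if got != expected[prompt]:
            log.warning("FAIL prompt=%r expected=%r got=%r", prompt, expected[prompt], got)
            failures.append(prompt)
    return failures

# Hypothetical test set and a deliberately flawed toy model.
expected = {"2+2": "4", "3+3": "6", "5+5": "10"}

def toy_model(prompt: str) -> str:
    return "4" if prompt == "2+2" else "6"

batch_1 = sample_prompts(list(expected), 2, seed=42)
batch_2 = sample_prompts(list(expected), 2, seed=42)
failures = run_eval(toy_model, list(expected), expected)
print(batch_1 == batch_2, failures)
```

With the seed pinned, a score change between runs points at your model, not at a different draw of prompts.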

One more thing. Monitor cost. If a tweak doubles your score but triples your compute, that is not progress. Seek steady gains you can keep paying for.
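The arithmetic is worth writing down once. This sketch assumes you can boil things down to a single score and a single compute cost per run, which is a simplification, and the numbers are just the ones from the example above.

```python
def score_per_cost(score: float, compute_cost: float) -> float:
    """Score earned per unit of compute spent."""
    if compute_cost <= 0:
        raise ValueError("compute_cost must be positive")
    return score / compute_cost

baseline = score_per_cost(score=1.0, compute_cost=1.0)
tweaked = score_per_cost(score=2.0, compute_cost=3.0)  # double the score, triple the cost

# Relative change in efficiency compared with the baseline.
change = tweaked / baseline - 1
print(f"efficiency change: {change:.0%}")
```

Double the score at triple the cost leaves you with about two thirds of the efficiency you had before, so the tweak only pays off if rewards grow faster than the bill.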

Where this could go next

The AI crowd keeps moving fast. Smaller, smarter models look better every month. Retrieval improves. Tool use gets sharper. In that setting, a market that rewards measurable performance feels timely.

We might see subnets tuned for safety checks, code reasoning, or high-stakes retrieval. We might see more cross-subnet pipelines. Quality evaluation could mature into its own craft, with validators as respected curators. None of this requires a single boss. It just needs clear signals and fair payouts.

A quick word on culture and timing

Crypto loves open systems. Machine learning loves rapid iteration. Put them together, and you get a culture that ships in public, argues in channels, and ships again. It can be messy. It is also fun if you like building with the lights on.

Interest in AI tokens rises and falls with hype cycles. That is normal. Underneath the noise, the question is steady. Does the network pay for real value? If yes, builders stay. If not, they move on. Markets are simple like that, even when the models are not.

Final take

Bittensor TAO aims for a clear goal. Make a home for open machine intelligence, and let the market reward what works. It is part protocol, part social contract, and part engineering puzzle. Some days it will feel smooth. Some days it will feel like herding cats. That is the deal with live systems.

If you care about crypto and AI together, this is worth a look. Start with one subnet. Test a miner. Observe the reward flow. Keep your keys safe with tools you trust, like Trezor or Ledger. Learn what the market likes, then give it more. Simple principle. Not always easy. Often rewarding.